Autonomous vehicles need more than radar, LiDAR or camera inputs alone; to operate safely, they rely on robust real-time sensor fusion. Arnold NextG's NX NextMotion offers a drive-by-wire architecture certified to ISO/SAE 21434, ASIL D and SIL3 requirements. By combining multiple redundant sensor paths with edge AI, it improves perception accuracy, decision-making and control. Experts at AEye Lidar Systems and at Mobileye and Intel confirm that only sensor fusion delivers reliable automated mobility.


Autonomous Platforms Rely on a Multi-Sensor Ecosystem for Situational Awareness

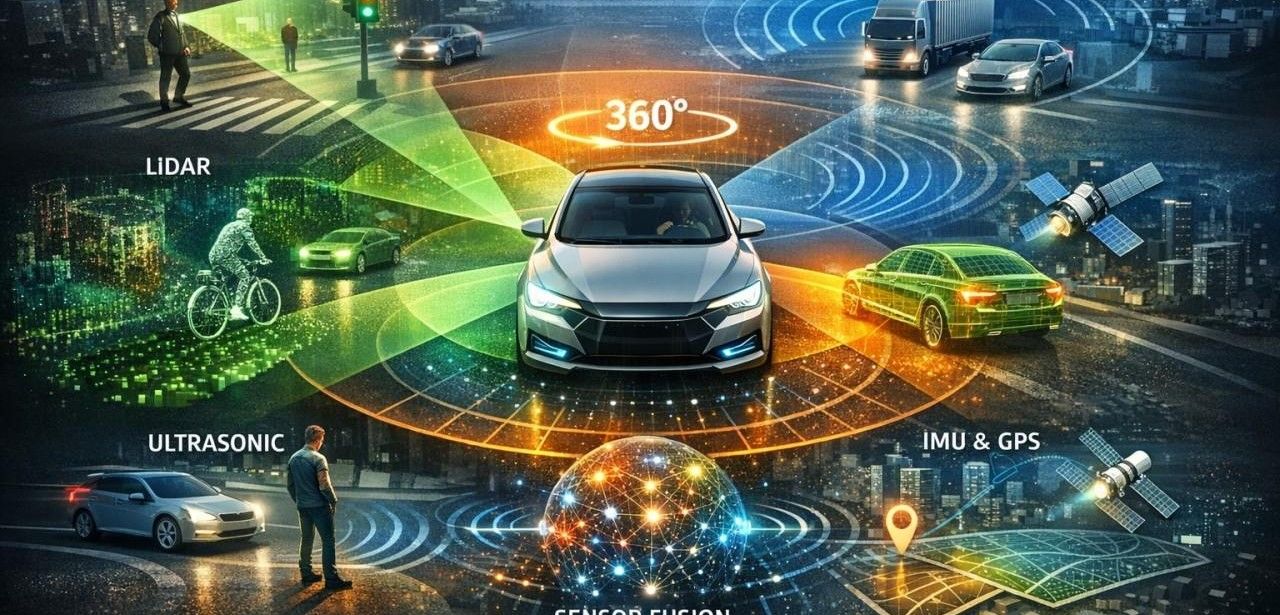

Autonomous vehicles use a diverse sensor suite including cameras, radar, LiDAR, ultrasonic modules, and inertial measurement units (IMUs) coupled with GPS. Cameras provide rich visual imagery but underperform in darkness and glare. Radar detects object distance and speed in all weather, but at limited resolution. LiDAR generates precise three-dimensional point clouds for depth perception but is sensitive to lighting conditions. Ultrasonic sensors excel at short-range obstacle detection, while IMUs combined with GPS maintain localization.
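The strengths and weaknesses listed above can be encoded as a simple capability table, which is one way a fusion stack might decide which inputs to trust under current conditions. This is a hypothetical, deliberately coarse sketch: it uses only the degradations named in this section, treats them as on/off, and the condition labels (`darkness`, `glare`, `strong_light`) are invented for illustration.

```python
# Hypothetical capability table built only from the weaknesses named above.
# Real sensors degrade gradually; here a condition either degrades a sensor or not.
DEGRADED_BY: dict[str, set[str]] = {
    "camera": {"darkness", "glare"},  # underperforms in darkness and glare
    "radar": set(),                   # all-weather, but limited resolution
    "lidar": {"strong_light"},        # precise 3D depth, but light-sensitive
    "ultrasonic": set(),              # short-range obstacle detection only
    "imu_gps": set(),                 # localization rather than object detection
}


def usable_sensors(conditions: set[str]) -> set[str]:
    """Return the sensors not degraded by any currently active condition."""
    return {name for name, weak in DEGRADED_BY.items() if not (weak & conditions)}


# At night the camera drops out; the remaining sensors carry perception.
print(sorted(usable_sensors({"darkness"})))  # ['imu_gps', 'lidar', 'radar', 'ultrasonic']
```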

Sensor Fusion Merges Raw Data into Three-Dimensional World Representations

Sensor fusion integrates data from cameras, radar, LiDAR, ultrasound, GPS, and inertial measurement units into a unified, multidimensional perception model. Low-level fusion aggregates raw measurements to reduce noise. Mid-level fusion correlates and merges object detections to improve classification accuracy. High-level fusion consolidates tracks and situational assessments to guide automated decision-making. Redundant information pathways increase reliability and help meet the safety requirements for SAE Level 3 automation and above. Industry leaders emphasize that fusion must be both trustworthy and redundant.
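The low- and mid-level fusion steps described above can be sketched in a few lines of Python. This is a minimal illustration, not NX NextMotion's implementation: low-level fusion is shown as inverse-variance weighting of redundant range readings (a standard way to combine independent, unbiased measurements), and mid-level fusion as greedy nearest-neighbour association of camera and LiDAR detections within a distance gate. The function names, the `Detection` type, the sample values and the 1 m gate are all hypothetical.

```python
import math
from dataclasses import dataclass


def fuse_measurements(values: list[float], variances: list[float]) -> tuple[float, float]:
    """Low-level fusion: inverse-variance weighted mean of redundant raw readings.

    Assumes independent, unbiased readings. Returns the fused value and its
    variance, which is never larger than the smallest input variance.
    """
    if not values or len(values) != len(variances):
        raise ValueError("need one variance per measurement")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, values)) / total
    return fused, 1.0 / total


@dataclass
class Detection:
    x: float  # metres ahead of the vehicle
    y: float  # metres to the left


def associate(camera: list[Detection], lidar: list[Detection],
              gate: float = 1.0) -> list[tuple[int, int]]:
    """Mid-level fusion: pair each camera detection with the nearest unused
    LiDAR detection within `gate` metres (greedy, so order-dependent)."""
    pairs: list[tuple[int, int]] = []
    used: set[int] = set()
    for i, cam in enumerate(camera):
        best_j, best_d = None, gate
        for j, pt in enumerate(lidar):
            d = math.hypot(cam.x - pt.x, cam.y - pt.y)
            if j not in used and d <= best_d:
                best_j, best_d = j, d
        if best_j is not None:
            used.add(best_j)
            pairs.append((i, best_j))
    return pairs


# Radar and LiDAR report the range to the same object; LiDAR is less noisy,
# so the fused range lands close to the LiDAR value with lower variance.
fused_range, fused_var = fuse_measurements([20.4, 20.0], [0.25, 0.01])
print(f"fused range {fused_range:.3f} m, variance {fused_var:.4f} m^2")

cams = [Detection(10.0, 0.0), Detection(30.0, 5.0)]
points = [Detection(30.3, 5.1), Detection(10.2, -0.1)]
print(associate(cams, points))  # [(0, 1), (1, 0)]
```

A production tracker would typically replace the greedy loop with a global assignment such as the Hungarian algorithm and gate on statistical distance rather than a fixed radius.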

Autonomous Shuttles Navigate Urban Streets Safely Using Fused Sensors

In urban environments, autonomous shuttles fuse camera imagery and LiDAR data to handle traffic lights, pedestrians, and congestion precisely. In ports and logistics yards, obstacle avoidance merges radar measurements, fine-grained LiDAR data, GPS positioning, and IMU feedback to enable accurate docking. In mining and construction, platforms combine radar scans and thermal imaging for reliable operation in harsh conditions. Military vehicles use layered sensor arrays with isolated computing units to remain fail-operational at all times.
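Fusing GPS positioning with IMU feedback, as in the docking case, is a classic application of a Kalman filter. The sketch below is a one-dimensional, constant-velocity filter along the approach axis: IMU acceleration drives the prediction step and each GPS fix corrects it. It is an illustrative assumption, not the platform's documented method; the class name and noise variances are hypothetical placeholders, and a real docking system would track more states (heading, lateral offset) and fuse LiDAR as well.

```python
from dataclasses import dataclass, field


@dataclass
class Kalman1D:
    """Constant-velocity Kalman filter along one axis (e.g. distance to a dock).

    State x = [position (m), velocity (m/s)]; P is its 2x2 covariance.
    IMU acceleration drives the prediction, GPS position the correction.
    """
    accel_var: float = 0.05  # hypothetical IMU acceleration noise, (m/s^2)^2
    gps_var: float = 0.5     # hypothetical GPS position noise, m^2
    x: list[float] = field(default_factory=lambda: [0.0, 0.0])
    P: list[list[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])

    def predict(self, accel: float, dt: float) -> None:
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        (a, b), (c, d) = self.P
        q = self.accel_var
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and white-acceleration Q.
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, gps_pos: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.gps_var       # innovation variance for H = [1, 0]
        k0, k1 = a / s, c / s      # Kalman gain K = P H^T / s
        innovation = gps_pos - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


# Vehicle holding position 5 m from the dock: no IMU acceleration, steady GPS fixes.
kf = Kalman1D()
for _ in range(50):
    kf.predict(accel=0.0, dt=0.1)
    kf.update(gps_pos=5.0)
print(f"position {kf.x[0]:.2f} m, velocity {kf.x[1]:.2f} m/s")
```

The IMU runs at a high rate between GPS fixes, so in practice `predict` would be called more often than `update`.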

NX NextMotion delivers instant perception and control with fourfold redundancy

Arnold NextG's NX NextMotion platform integrates perception with vehicle control, minimizing latency between detection and actuation. It features quadruple-redundant sensor and command pathways to maintain safe operation when components fail. Real-time edge processing accelerates object identification, while the drive-by-wire modules for steering, acceleration, and braking hold ISO/SAE 21434, ASIL D, and SIL3 certifications. This integrated architecture provides continuous fault tolerance and a direct detection-to-action flow with minimal response delay when hazards arise.
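One common way to exploit fourfold redundancy is to vote across the channels so that a single faulty path cannot command the actuators. The voter below is a hypothetical sketch; the article does not describe how NX NextMotion arbitrates between its four pathways. It takes the median of four redundant commands (for example, steering angles), flags channels that deviate by more than a tolerance, and requires at least three agreeing channels before issuing a command. The 3-of-4 policy, the tolerance and the `NoMajorityError` name are assumptions.

```python
from statistics import median


class NoMajorityError(RuntimeError):
    """Raised when fewer than three channels agree (hypothetical policy)."""


def vote(channels: list[float], tolerance: float) -> tuple[float, list[int]]:
    """Median voter over four redundant command channels.

    Returns the voted command and the indices of channels flagged as faulty.
    """
    if len(channels) != 4:
        raise ValueError("expected exactly four redundant channels")
    reference = median(channels)
    faulty = [i for i, v in enumerate(channels) if abs(v - reference) > tolerance]
    healthy = [v for i, v in enumerate(channels) if i not in faulty]
    if len(healthy) < 3:
        # Without a 3-of-4 majority the command cannot be trusted.
        raise NoMajorityError("no majority among channels; request safe stop")
    return median(healthy), faulty


# Channel 3 reports a wildly different steering angle and is outvoted.
print(vote([10.0, 10.1, 9.9, 50.0], tolerance=0.5))  # (10.0, [3])
```

Using the median rather than the mean as the reference keeps a single extreme value from dragging the comparison point toward it.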

NX NextMotion Moves AI Processing On Board, Enabling Millisecond Edge Reactions

As system intelligence advances, NX NextMotion shifts AI workloads on board, enabling real-time decisions at the edge within milliseconds. Reducing reliance on cloud connectivity also improves overall resilience. Key challenges it addresses include thermal management, deterministic low-latency processing, and robust cybersecurity for distributed control systems. Its modular design, embedded diagnostics, and cross-platform interoperability ensure consistently high reliability under harsh environmental conditions while supporting continuous learning algorithms without sacrificing performance.
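Deterministic, millisecond-scale reaction implies that every perception-and-control cycle has a fixed latency budget that is checked at run time. The class below is a minimal sketch of such a deadline monitor, assuming a hypothetical 10 ms budget and a policy of latching a degraded mode after three consecutive overruns; neither value comes from the article. The clock is injectable so the logic can be tested without real timing. Python is not a real-time runtime, so this only illustrates the logic; a production system would typically enforce it on a real-time operating system or a dedicated safety controller.

```python
import time
from typing import Callable


class DeadlineMonitor:
    """Flags cycles that exceed a fixed latency budget (hypothetical policy).

    After `max_misses` consecutive overruns it latches `degraded`, at which
    point a fallback such as a minimal-risk manoeuvre would take over.
    """

    def __init__(self, budget_s: float = 0.010, max_misses: int = 3,
                 clock: Callable[[], float] = time.perf_counter) -> None:
        self.budget_s = budget_s
        self.max_misses = max_misses
        self.clock = clock
        self.consecutive_misses = 0
        self.degraded = False

    def run_cycle(self, step: Callable[[], object]) -> float:
        """Run one perception/control step and return its elapsed time in seconds."""
        start = self.clock()
        step()
        elapsed = self.clock() - start
        if elapsed > self.budget_s:
            self.consecutive_misses += 1
            if self.consecutive_misses >= self.max_misses:
                self.degraded = True  # latched: stays set until an explicit reset
        else:
            self.consecutive_misses = 0
        return elapsed


monitor = DeadlineMonitor()
for _ in range(5):
    monitor.run_cycle(lambda: sum(range(10_000)))  # stand-in workload
print("degraded:", monitor.degraded)
```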

NX NextMotion integrates sensor fusion and redundant drive-by-wire safety

Arnold NextG's NX NextMotion platform integrates radar, LiDAR, cameras, ultrasound, GPS, and inertial measurement units into a comprehensive 360-degree environmental model for autonomous vehicles. Its redundant drive-by-wire architecture meets ISO/SAE 21434, ASIL D, and SIL3 requirements, providing fault-tolerant control of steering, acceleration, and braking. State-of-the-art on-board edge AI enables real-time perception and decision-making. Experts at AEye and Mobileye endorse sensor fusion as essential for scalable, reliable autonomous driving.
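A 360-degree environmental model presupposes that the combined sensor fields of view leave no blind sectors around the vehicle. The function below is a simple, hypothetical way to check a mounting layout for angular gaps. The sensor yaws and fields of view in the example are invented for illustration and do not describe NX NextMotion hardware, and the check ignores range and occlusion.

```python
def coverage_gaps(sensors: list[tuple[float, float]], step_deg: float = 1.0) -> list[float]:
    """Return sampled bearings (degrees, 0 = straight ahead) that no sensor covers.

    Each sensor is given as (mounting_yaw_deg, horizontal_fov_deg).
    """
    gaps = []
    for k in range(round(360 / step_deg)):
        bearing = k * step_deg
        # Signed angle from each sensor's boresight, wrapped to [-180, 180).
        covered = any(
            abs((bearing - yaw + 180.0) % 360.0 - 180.0) <= fov / 2
            for yaw, fov in sensors
        )
        if not covered:
            gaps.append(bearing)
    return gaps


# Four corner-mounted sensors, each with a hypothetical 100-degree field of view.
layout = [(45.0, 100.0), (135.0, 100.0), (225.0, 100.0), (315.0, 100.0)]
print(coverage_gaps(layout))  # []
```

Narrowing each field of view to 80 degrees in the same layout would open blind sectors between adjacent sensors, which the function reports as the uncovered bearings.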