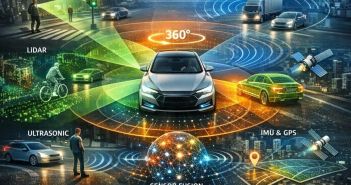

Using an array of cameras, LiDAR, radar, GPS and inertial measurement units, NX NextMotion performs real-time sensor fusion to enable autonomous vehicles to navigate urban environments, logistics hubs, mining operations and mission-critical defence deployments. Its embedded edge processing synchronizes dynamic data streams into a consistent, fault-tolerant world model. Compliant with ASIL D, SIL3, ISO/SAE 21434 and ISO/PAS 8800, its Drive-Steer-Brake-by-Wire architecture delivers rapid control responses, minimal latency and robust system reliability.

NX NextMotion Ensures Precise Sensor Fusion for Autonomous Navigation

Robust vehicle control for mining: NX NextMotion (Photo: Arnold NextG)

In dense urban streets, on industrial yards or in rugged operational zones, autonomous vehicles must accurately detect, interpret and react to objects. Cameras provide context such as traffic signal phases and signage, while LiDAR generates centimeter-precise 3D point clouds. Radar, GPS and inertial measurement units add distance, position and motion data. NX NextMotion combines this sensor fusion with certified ASIL D and SIL3 Drive-Steer-Brake-by-Wire control to deliver a fault-tolerant world model.

Autonomous shuttles navigate urban traffic using robust sensor fusion

NX NextMotion processes camera and LiDAR streams concurrently for safe navigation of autonomous shuttles in crowded urban settings. Through robust synchronization of sensor inputs, it interprets traffic signals, signs, and obstacle outlines reliably in darkness or glare. Automated classification identifies pedestrians, cyclists, and vehicles with high accuracy. The 2024 BMDV guidelines mandate multi-layered verification for regulatory approval; redundant, fail-safe sensor fusion supports continuous, secure operation in public transport.
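The synchronization step mentioned above can be illustrated with a minimal sketch. It assumes that camera and LiDAR frames carry sorted timestamps in milliseconds and that frames are paired by nearest timestamp within a skew limit. The function name `pair_by_timestamp` and the 20 ms default are hypothetical and are not taken from NX NextMotion.

```python
from bisect import bisect_left


def pair_by_timestamp(camera_ms, lidar_ms, max_skew_ms=20):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists must be sorted ascending. Pairs whose time skew exceeds
    max_skew_ms are dropped instead of being fused (illustrative policy).
    """
    pairs = []
    for t_cam in camera_ms:
        i = bisect_left(lidar_ms, t_cam)
        # Candidates are the LiDAR stamps just before and just after t_cam.
        candidates = lidar_ms[max(i - 1, 0):i + 1]
        if not candidates:
            continue  # no LiDAR data at all
        t_lidar = min(candidates, key=lambda t: abs(t - t_cam))
        if abs(t_lidar - t_cam) <= max_skew_ms:
            pairs.append((t_cam, t_lidar))
    return pairs


# A 30 Hz camera against a 10 Hz LiDAR: only well-aligned frames are paired.
print(pair_by_timestamp([0, 33, 66, 100], [0, 100]))
```

Dropping badly aligned frames is one possible policy; a production system might instead interpolate or motion-compensate the LiDAR sweep.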

Millimeter-Precision Container Handling Achieved Through Advanced Sensor Fusion

Millimeter-level precision is essential in container terminals and intermodal logistics hubs. Radar sensors maintain accurate distance detection even under fog or heavy rain, while LiDAR refines contours and cross-validates measurements. High-resolution GPS combined with inertial measurement units (IMUs) enables localization within a few centimeters. The Intel-Mobileye system-level architecture synchronizes multisensor data and resolves discrepancies. By leveraging edge computing, NX NextMotion implements fourfold redundancy and local data processing to minimize latency.
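As an illustration of how a radar range and a LiDAR range might be cross-validated and combined, the sketch below uses textbook inverse-variance weighting with a simple consistency gate. This is a generic technique, not the method documented for NX NextMotion; the function name `fuse_ranges`, the noise figures and the 3-sigma gate are assumptions.

```python
import math


def fuse_ranges(radar_m, radar_sigma_m, lidar_m, lidar_sigma_m, gate=3.0):
    """Fuse two range measurements of the same target.

    Returns (fused_range_m, fused_sigma_m, consistent). `consistent` is
    False when the two sensors disagree by more than `gate` standard
    deviations of their difference (hypothetical cross-validation rule).
    """
    diff_sigma = math.hypot(radar_sigma_m, lidar_sigma_m)
    consistent = abs(radar_m - lidar_m) <= gate * diff_sigma

    # Inverse-variance weights: the less noisy sensor counts for more.
    w_radar = 1.0 / radar_sigma_m ** 2
    w_lidar = 1.0 / lidar_sigma_m ** 2
    fused = (w_radar * radar_m + w_lidar * lidar_m) / (w_radar + w_lidar)
    fused_sigma = math.sqrt(1.0 / (w_radar + w_lidar))
    return fused, fused_sigma, consistent


# Assumed noise levels: radar 0.2 m, LiDAR 0.1 m.
fused, sigma, ok = fuse_ranges(10.0, 0.2, 10.1, 0.1)
print(round(fused, 3), round(sigma, 3), ok)
```

The fused estimate always has a smaller standard deviation than either input, which is why a second sensor helps even when it is the noisier one. When `consistent` is False, a real system would more likely fall back to a degraded mode than trust the weighted mean.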

Radar, Thermal Imaging and LiDAR Fusion Enhances Detection in Mining

The centerpiece of safe mobility: NX NextMotion (Photo: Arnold NextG)

Harsh mining and construction environments generate dust clouds and rough terrain that impair standard sensors. Systems that fuse radar, thermal camera and LiDAR data maintain clear situational awareness under these conditions. This multi-modal approach ensures reliable identification of personnel and obstacles, reduces false positives, and eliminates blind spots. NX NextMotion employs a modular design that segregates safety-critical from auxiliary functions, meets VW80000 Class 5 requirements, and supports efficient retrofitting of legacy industrial platforms.

Redundant Sensor Arrays Ensure Fail-Operational Capability For Military UGVs

In military operations, communication failures and electronic interference challenge reliability. Redundant sensor arrays, computing units, and layered safety logic ensure fail-operational performance. A NATO STO study highlights risk aggregation across system tiers and the importance of protective measures. Standards such as ISO/SAE 21434 and ISO/PAS 8800 define cyber resilience and functional safety requirements. NX NextMotion offers fourfold redundant control logic, dual power supplies, isolated communication channels, and watchdog modules for NATO-compatible UGVs.
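The article does not say how the four redundant control channels are arbitrated. One common pattern is a median voter that ignores silent channels and outvotes a single outlier, sketched below. The function name `vote`, the tolerance and the minimum-agreement rule are hypothetical.

```python
from statistics import median


def vote(commands, tolerance=0.5, min_agreeing=2):
    """Arbitrate between redundant channel outputs.

    commands: one value per channel, or None for a channel that is silent.
    Returns (value, agreeing_channel_indices); value is None when too few
    channels agree, which a caller would treat as "enter safe state".
    """
    alive = [c for c in commands if c is not None]
    if len(alive) < min_agreeing:
        return None, []

    mid = median(alive)
    # Channels within `tolerance` of the median count as agreeing.
    agreeing = [i for i, c in enumerate(commands)
                if c is not None and abs(c - mid) <= tolerance]
    if len(agreeing) < min_agreeing:
        return None, agreeing

    value = median([commands[i] for i in agreeing])
    return value, agreeing


# Channel 2 is silent and channel 3 is faulty; channels 0 and 1 still agree.
print(vote([10.0, 10.2, None, 25.0]))
```

With four channels, this scheme keeps producing a command after one channel fails silently and another produces a wrong value, which is the kind of behaviour "fail-operational" refers to.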

NX NextMotion delivers seamless perception-control integration for real-time autonomy

Sensor fusion alone cannot guarantee seamless decision-making in autonomous platforms; linking perception and control without delay is critical. NX NextMotion delivers an integrated reaction loop, incorporating validated sensor fusion results, edge processing logic, and direct drive-steer-brake-by-wire command execution. Actuators, redundant safety modules, and diagnostics operate in a unified framework. This deterministic real-time architecture ensures both autonomous and remotely operated vehicles maintain reliable, instantaneous responses when navigating complex or hazardous environments.
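To make the idea of a monitored perception-to-actuation loop concrete, here is a minimal watchdog sketch: the fused command is forwarded to the actuators only while the perception side keeps signalling that it is alive, and a safe command is issued otherwise. The class `Watchdog`, the function `control_step`, the 50 ms timeout and the safe command value are all assumptions for illustration.

```python
import time


class Watchdog:
    """Expires when kick() has not been called within timeout_s seconds."""

    def __init__(self, timeout_s, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so the logic can be tested
        self.last_kick = clock()

    def kick(self):
        # Called by the perception side each time fresh fused data arrives.
        self.last_kick = self.clock()

    def expired(self):
        return self.clock() - self.last_kick > self.timeout_s


def control_step(watchdog, fused_command, safe_command=0.0):
    """Forward the fused command unless the perception input is stale."""
    return safe_command if watchdog.expired() else fused_command


# Simulated clock: perception stalls for 100 ms against a 50 ms timeout.
now = [0.0]
wd = Watchdog(0.05, clock=lambda: now[0])
print(control_step(wd, 1.5))  # fresh data, command passes through
now[0] = 0.1
print(control_step(wd, 1.5))  # stale data, safe command is issued
```

Using a monotonic clock avoids false expiries when wall-clock time is adjusted; in an embedded controller the same role is usually played by a hardware timer.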

Multi-sensor Fusion with NX NextMotion Provides Robust Fault-Tolerant Model

Combining multi-sensor data streams through NX NextMotion ensures a consistent, fault-tolerant representation of the operational environment across applications, including autonomous shuttles, precision logistics operations, and military unmanned ground vehicles. Certified to ASIL D, SIL3, ISO/SAE 21434, ISO/PAS 8800, and VW80000 standards, the integrated Drive-Steer-Brake-by-Wire architecture delivers resilient perception and immediate actuation responses. This solution establishes a robust and scalable platform that underpins future advancements in autonomous mobility and safety-critical control.